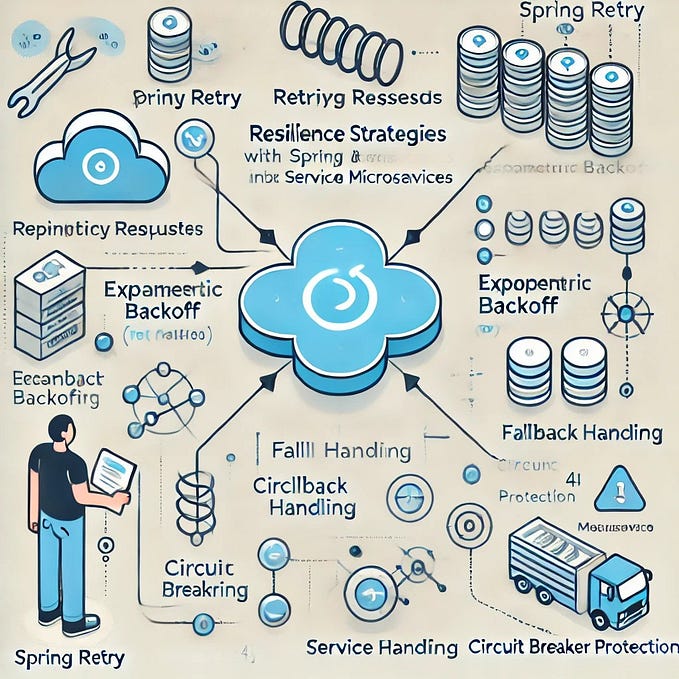

🚨 Understanding the Circuit Breaker Pattern to Prevent Cascading Failures in Microservices 🚨

In the world of microservices, we often deal with multiple services communicating with each other. One service might rely on another for data or functionality. But what happens if one of these services fails or becomes temporarily unavailable? If we keep sending requests to an unresponsive service, it can lead to wasted resources and slow performance. That’s where the Circuit Breaker Pattern comes in.

Let’s break down the Circuit Breaker Pattern step by step, starting with basic concepts like threads and thread pools. Then, we’ll move on to understanding how circuit breakers work, their states, and why they are crucial in making our systems more resilient.

Threads and Thread Pools

Before diving into circuit breakers, let's first look at what happens inside an application when it calls another service.

Imagine you have an app that handles each user request on a thread. A thread is like a worker in a factory: it carries out one task at a time, such as fetching information from a microservice. If many users come at once, the app needs more threads to handle the requests.

However, creating too many threads can overwhelm the system, because every thread costs memory and scheduling overhead. That's where the thread pool comes into play. Instead of creating a new thread for each task, a thread pool keeps a limited number of threads ready to work. Tasks that arrive while all threads are busy wait in a queue, and when a thread finishes a task, it picks up the next one. This approach helps to manage resources efficiently.
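To make the thread-pool idea concrete, here is a minimal sketch using the JDK's `ExecutorService`. The class and method names (`ThreadPoolSketch`, `threadNamesFor`) are hypothetical; the point is only that many tasks are served by a small, fixed set of reused threads.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class ThreadPoolSketch {

    /**
     * Runs taskCount small tasks on a pool of poolSize threads and returns,
     * for each task, the name of the thread that executed it.
     */
    static List<String> threadNamesFor(int poolSize, int taskCount) {
        // A fixed pool: at most poolSize worker threads are ever created.
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<CompletableFuture<String>> futures = new ArrayList<>();
            for (int i = 0; i < taskCount; i++) {
                // Tasks beyond poolSize wait in the pool's queue until a thread is free.
                futures.add(CompletableFuture.supplyAsync(
                        () -> Thread.currentThread().getName(), pool));
            }
            List<String> names = new ArrayList<>();
            for (CompletableFuture<String> future : futures) {
                names.add(future.join()); // wait for each task to finish
            }
            return names;
        } finally {
            pool.shutdown(); // no new tasks are accepted; idle threads then exit
        }
    }
}
```

Calling `threadNamesFor(3, 20)` runs all 20 tasks, yet the returned list contains at most three distinct thread names, because each finished thread is reused for the next queued task.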

But what if one of the services that these threads depend on becomes unavailable? Now, let’s move on to the circuit breaker pattern to see how it helps in such situations.

The Problem: When a Microservice Fails

In a microservice architecture, services talk to each other all the time. Imagine a situation where Service A calls Service B to get some data, but suddenly, Service B is down or responding very slowly. Each call from Service A then waits for Service B to respond, and while it waits, it holds one of those threads we talked about. If Service A keeps making calls, more and more threads end up stuck waiting. Once every thread in the pool is busy, Service A can no longer serve any request, even ones that don't need Service B, so it slows down and can effectively fail as well.
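The sketch below reproduces this failure mode in plain Java under simplifying assumptions: a `CountDownLatch` stands in for a Service B that never answers, and the pool size and the 200 ms wait are arbitrary. The class `PoolExhaustionSketch` is hypothetical. Once every pool thread is blocked on Service B, even a request that has nothing to do with Service B cannot get a thread.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

final class PoolExhaustionSketch {

    static final String NO_THREAD = "timed out: no free thread";

    /**
     * Occupies every thread of a poolSize-thread pool with calls to a hung
     * "Service B", then tries to run an unrelated, fast request on the same pool.
     */
    static String fastRequestWhileServiceBHangs(int poolSize) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        CountDownLatch serviceBAnswers = new CountDownLatch(1); // stays closed: B never responds
        try {
            for (int i = 0; i < poolSize; i++) {
                pool.submit(() -> {
                    serviceBAnswers.await(); // this thread is now stuck waiting on Service B
                    return null;
                });
            }
            // This request does not need Service B, but it still needs a free thread.
            return CompletableFuture.supplyAsync(() -> "fast response", pool)
                    .completeOnTimeout(NO_THREAD, 200, TimeUnit.MILLISECONDS)
                    .join();
        } finally {
            serviceBAnswers.countDown(); // release the stuck threads so the pool can shut down
            pool.shutdown();
        }
    }
}
```

With a pool of 2, the fast request never gets a thread and the call returns `NO_THREAD`. Failing fast instead of waiting, which is what a circuit breaker does once it is open, keeps calls to a dead dependency from tying up threads like this.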

This is where the Circuit Breaker Pattern comes in.

What Is the Circuit Breaker Pattern?

The Circuit Breaker Pattern is a design pattern used to prevent an application from continuously trying to call a service that is failing. It works similarly to an electrical circuit breaker in your home. If something goes wrong, like a short circuit, the breaker cuts off the electricity to prevent damage. In the same way, the circuit breaker in a software system stops sending requests to a failing service until it is back to normal.

Think of it as a safety switch for your services.

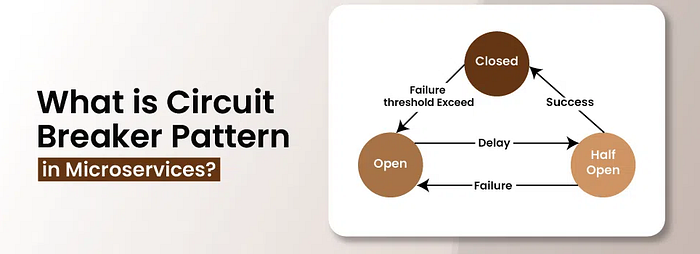

The Three States of a Circuit Breaker

The circuit breaker has three states:

- Closed State: This is the normal state. Everything is working fine, and the circuit breaker allows requests to flow freely between services. If Service A calls Service B, and it responds successfully, the circuit breaker remains in the Closed State.

- Open State: When the circuit breaker detects that Service B is failing (for example, once the number or proportion of failed calls crosses a configured threshold), it moves to the Open State. In this state, the circuit breaker stops sending requests to Service B entirely and fails fast instead, typically by returning an error or a fallback response. This prevents further failures from piling up and wasting resources.

- Half-Open State: After a configured delay, the circuit breaker decides to test if Service B is working again. It will allow a limited number of test requests to go through. If these requests are successful, the circuit breaker moves back to the Closed State. If the requests still fail, it goes back to the Open State.
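The three states and the transitions between them can be written as a small state machine. This is an illustrative sketch; the class `CircuitStates` and the event names are hypothetical and not taken from any library.

```java
final class CircuitStates {

    enum State { CLOSED, OPEN, HALF_OPEN }

    enum Event {
        FAILURE_THRESHOLD_REACHED, // too many calls failed while closed
        DELAY_ELAPSED,             // the open period is over
        TRIAL_SUCCEEDED,           // a test request in half-open succeeded
        TRIAL_FAILED               // a test request in half-open failed
    }

    /** Returns the next state; events that do not apply to a state leave it unchanged. */
    static State next(State current, Event event) {
        switch (current) {
            case CLOSED:
                return event == Event.FAILURE_THRESHOLD_REACHED ? State.OPEN : State.CLOSED;
            case OPEN:
                return event == Event.DELAY_ELAPSED ? State.HALF_OPEN : State.OPEN;
            case HALF_OPEN:
                if (event == Event.TRIAL_SUCCEEDED) {
                    return State.CLOSED;
                }
                return event == Event.TRIAL_FAILED ? State.OPEN : State.HALF_OPEN;
            default:
                throw new IllegalArgumentException("Unknown state: " + current);
        }
    }
}
```

Modelling the transitions as a pure function like this makes them easy to test in isolation; a real breaker adds the counting and timing that produce these events.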

How Does the Circuit Breaker Work?

Let’s imagine you have a service that tracks book information (like in the previous blog examples). This service depends on another microservice to get ISBN numbers. If the ISBN service fails and your book service keeps trying to get the data, the circuit breaker can help:

- Closed State: At first, the book service sends requests to the ISBN service, and everything works fine. The circuit breaker remains closed.

- Open State: If the ISBN service suddenly stops responding (say, after 3 failed requests), the circuit breaker moves to the open state. Now, instead of sending more requests to the failing ISBN service, it returns a default response or an error message, saving time and system resources.

- Half-Open State: After a few minutes, the circuit breaker lets a few test requests through to the ISBN service. If the service works again, the circuit breaker closes, and everything goes back to normal. If the service is still down, the circuit breaker returns to the open state and waits for another period before testing again.
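Here is a minimal sketch of the book service's breaker. Everything in it is an assumption for illustration: the class `SimpleCircuitBreaker`, a threshold of consecutive failures, an open period in milliseconds, a single trial request in the half-open state, and a fallback string in place of real ISBN data. The clock is injected as a `LongSupplier` so the open period can be simulated without actually waiting.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

final class SimpleCircuitBreaker {

    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;     // consecutive failures that open the circuit
    private final long openDurationMillis;  // how long to stay open before a trial request
    private final LongSupplier clockMillis; // injected so tests can control time

    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAtMillis = 0L;

    SimpleCircuitBreaker(int failureThreshold, long openDurationMillis, LongSupplier clockMillis) {
        this.failureThreshold = failureThreshold;
        this.openDurationMillis = openDurationMillis;
        this.clockMillis = clockMillis;
    }

    /**
     * Calls the protected service unless the circuit is open.
     * Holding the lock during the call serializes all callers; acceptable only for a sketch.
     */
    synchronized String call(Supplier<String> service, String fallback) {
        if (state == State.OPEN) {
            if (clockMillis.getAsLong() - openedAtMillis < openDurationMillis) {
                return fallback; // fail fast without touching the failing service
            }
            state = State.HALF_OPEN; // open period is over: allow one trial request
        }
        try {
            String result = service.get();
            state = State.CLOSED; // a success (including a successful trial) closes the circuit
            consecutiveFailures = 0;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN; // trial failed, or too many failures in a row
                openedAtMillis = clockMillis.getAsLong();
            }
            return fallback;
        }
    }

    synchronized State state() {
        return state;
    }
}
```

With a threshold of 3, three failed ISBN lookups open the circuit; further lookups return the fallback without calling the ISBN service until the open period has passed. A failed trial sends the breaker straight back to `OPEN` and restarts the open period.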

Why Is This Important?

Without a circuit breaker, the book service would keep calling the ISBN service, wasting resources and slowing down the entire system. By using the circuit breaker, you make your application more resilient and prevent failures from cascading through the system.

What is Cascading Failure?

In a microservice architecture, services communicate with each other over a network. Let’s imagine a scenario:

- Service A relies on Service B.

- Service B relies on Service C.

If Service C fails and Service B keeps trying to call it, Service B might get overwhelmed with unhandled errors or slow responses. This, in turn, affects Service A since Service B becomes unresponsive. Eventually, Service A might fail, even though it wasn’t directly dependent on Service C.

This chain of failures is what we call a cascading failure. It can spread across the system, causing major disruptions and downtime.

How the Circuit Breaker Pattern Helps

To avoid cascading failures, we use the Circuit Breaker Pattern. When Service C becomes unresponsive, the circuit breaker opens, stopping Service B from repeatedly trying to communicate with it. This prevents Service B from becoming overloaded and keeps the failure contained. Over time, the circuit breaker will test if Service C is available again (in the half-open state), and if everything is back to normal, it will close and allow communication again.
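A small counting sketch shows why this contains the failure. It is deliberately stripped down and entirely hypothetical: a `callsReachingServiceC` method, a Service C that always fails, and a breaker that only counts consecutive failures and never recovers (there is no half-open state), so the only thing it demonstrates is how many of Service B's requests still reach Service C.

```java
import java.util.function.Supplier;

final class ContainmentSketch {

    /** Returns how many of Service B's requests actually reach the failing Service C. */
    static int callsReachingServiceC(int requests, boolean withBreaker, int failureThreshold) {
        int[] callsToC = {0};
        Supplier<String> serviceC = () -> {
            callsToC[0]++;
            throw new IllegalStateException("Service C is unresponsive");
        };

        int consecutiveFailures = 0;
        for (int i = 0; i < requests; i++) {
            if (withBreaker && consecutiveFailures >= failureThreshold) {
                continue; // circuit open: B answers A with a fallback, C is not called
            }
            try {
                serviceC.get();
                consecutiveFailures = 0;
            } catch (IllegalStateException e) {
                consecutiveFailures++; // without a breaker this count is simply ignored
            }
        }
        return callsToC[0];
    }
}
```

Without the breaker, all 10 requests hit Service C, and in a real system each one would tie up a thread in Service B while it waits. With a threshold of 3, only the first 3 do, and Service B keeps answering Service A immediately.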

Practical Example of Circuit Breaker Pattern with Code

In this example, we will create two microservices: OrderService and PaymentService. OrderService will depend on PaymentService to process payments. If PaymentService fails, OrderService will implement the circuit breaker pattern to prevent cascading failures.

We will use Quarkus and MicroProfile Fault Tolerance (provided in Quarkus by the `quarkus-smallrye-fault-tolerance` extension) to demonstrate how to use annotations like @CircuitBreaker, @Retry, @Fallback, and @Timeout.

1. Setting up the Payment Service

Let’s start by setting up the PaymentService, which will simulate payment processing.

```java
package com.example.payment;

import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;

import java.util.Random;

@Path("/payment")
public class PaymentResource {

    @GET
    @Produces(MediaType.TEXT_PLAIN)
    public String processPayment() {
        // Simulating a failure in 50% of requests
        if (new Random().nextBoolean()) {
            throw new RuntimeException("Payment service failed");
        }
        return "Payment successful";
    }
}
```

Here's what's happening:

- We have a simple REST endpoint, `/payment`.

- About 50% of the time, this service throws an exception, simulating a failure. Because the exception is not handled, the caller receives an HTTP 500 (Internal Server Error) response.

2. Setting up the Order Service

Now, we’ll create the OrderService, which depends on the PaymentService. We’ll implement the Circuit Breaker Pattern to protect OrderService from cascading failures in PaymentService.

```java
package com.example.order;

import jakarta.inject.Inject;
import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;

import org.eclipse.microprofile.faulttolerance.CircuitBreaker;
import org.eclipse.microprofile.faulttolerance.Fallback;
import org.eclipse.microprofile.faulttolerance.Retry;
import org.eclipse.microprofile.faulttolerance.Timeout;
import org.eclipse.microprofile.rest.client.inject.RestClient;

@Path("/order")
public class OrderResource {

    @Inject
    @RestClient
    PaymentService paymentService;

    @GET
    @Produces(MediaType.TEXT_PLAIN)
    @CircuitBreaker(
        requestVolumeThreshold = 4, // Size of the rolling window: the last 4 calls are evaluated
        failureRatio = 0.75,        // Open once 75% of those calls (3 of 4) have failed
        delay = 5000                // Milliseconds to stay open before moving to half-open
    )
    @Retry(maxRetries = 3, delay = 1000) // Retries a failed call up to 3 times, about 1 second apart
    @Timeout(2000)                       // Each attempt fails if no response arrives within 2 seconds
    @Fallback(fallbackMethod = "fallbackPayment") // Called once the call has finally failed, including while the circuit is open
    public String placeOrder() {
        return paymentService.processPayment();
    }

    // Fallback method: must have the same return type and parameters as placeOrder()
    public String fallbackPayment() {
        return "Payment service is currently unavailable. Please try again later.";
    }
}
```

Explanation of Annotations

- @CircuitBreaker: This annotation prevents cascading failures by "breaking the circuit" if too many requests to the PaymentService fail. Here's what the parameters do:

  - `requestVolumeThreshold`: The size of the rolling window, that is, how many of the most recent calls are evaluated when deciding whether to open the circuit. The circuit cannot open until at least 4 calls have been recorded.

  - `failureRatio`: The fraction of calls in that window that must fail for the circuit to open. A failure ratio of `0.75` means at least 75% of the calls in the window must fail; with a window of 4, the circuit opens once 3 of the last 4 calls (4 × 0.75) have failed.

  - `delay`: The time (in milliseconds) the circuit stays open before transitioning to the half-open state to test the service again.

- @Retry: This annotation defines how many times OrderService will retry the failed request before giving up. Here, it will retry up to 3 times with a 1-second delay between attempts. Because @Retry and @CircuitBreaker are on the same method, the circuit breaker counts each retry attempt as a separate call.

- @Timeout: Specifies how long OrderService will wait for a response from PaymentService. If PaymentService takes more than 2 seconds to respond, the attempt fails with a timeout, which counts as a failure for both @Retry and @CircuitBreaker.

- @Fallback: If all retries fail, or the circuit breaker is open, the fallback method (`fallbackPayment()`) will be called. This method returns a user-friendly message that the payment service is down. It must have the same parameters and return type as the annotated method.
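To see exactly when `requestVolumeThreshold = 4` and `failureRatio = 0.75` open the circuit, here is a plain-Java sketch of a count-based rolling window. It follows the MicroProfile Fault Tolerance description of these two parameters but is not the SmallRye implementation; the class `RollingWindowSketch` is hypothetical, and details such as clearing the window after the circuit closes again are left out.

```java
import java.util.ArrayDeque;
import java.util.Deque;

final class RollingWindowSketch {

    private final int requestVolumeThreshold; // number of recent calls evaluated
    private final double failureRatio;        // fraction of those calls that must fail
    private final Deque<Boolean> outcomes = new ArrayDeque<>(); // true = failed call

    RollingWindowSketch(int requestVolumeThreshold, double failureRatio) {
        this.requestVolumeThreshold = requestVolumeThreshold;
        this.failureRatio = failureRatio;
    }

    /** Records one call outcome; returns true if the circuit should now open. */
    boolean record(boolean failed) {
        outcomes.addLast(failed);
        if (outcomes.size() > requestVolumeThreshold) {
            outcomes.removeFirst(); // keep only the most recent calls
        }
        if (outcomes.size() < requestVolumeThreshold) {
            return false; // window not full yet: never open this early
        }
        long failures = outcomes.stream().filter(f -> f).count();
        return (double) failures / requestVolumeThreshold >= failureRatio;
    }
}
```

Three failures on their own do not open the circuit, because a window of 4 is not yet full; three failures followed by a success do, on the fourth call (3 / 4 = 0.75).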

3. Payment Service REST Client

OrderService uses a REST client to call PaymentService. Let’s define that:

```java
package com.example.order;

import org.eclipse.microprofile.rest.client.inject.RegisterRestClient;

import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;

@RegisterRestClient(configKey = "payment-service")
@Path("/payment")
public interface PaymentService {

    @GET
    @Produces(MediaType.TEXT_PLAIN)
    String processPayment();
}
```

- `@RegisterRestClient`: Registers this interface as a REST client for calling the PaymentService.

- The `configKey` points to the configuration in the `application.properties` file that defines the URL of the PaymentService.

4. Configuration in application.properties

Finally, we need to configure the URL for the PaymentService in OrderService:

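```properties
payment-service/mp-rest/url=http://localhost:8081
```

This points OrderService to the PaymentService running on port 8081. Since Quarkus listens on port 8080 by default, PaymentService has to be started on a different port, for example by setting `quarkus.http.port=8081` in its own `application.properties`.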

What Happens in Practice?

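When a client calls the /order endpoint:

- OrderService calls PaymentService.

- If PaymentService fails (or takes longer than 2 seconds to respond):

  - OrderService retries the call up to 3 more times, about 1 second apart. The circuit breaker records every attempt, including the retries.

  - Once 3 of the last 4 recorded attempts have failed, the circuit breaker opens. While it is open, attempts fail immediately without reaching PaymentService.

  - When the retries are used up, the fallback method is called, returning a user-friendly message.

- After 5 seconds (the `delay` set in `@CircuitBreaker`), the circuit breaker moves to the half-open state and lets a trial request through to see if PaymentService is back up. If it succeeds, the circuit closes again; if it fails, the circuit reopens.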
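The interaction between the annotations can be sketched in plain Java. In MicroProfile Fault Tolerance, each retry attempt passes through the circuit breaker and the fallback runs only after retries are exhausted, so the nesting is effectively Fallback(Retry(CircuitBreaker(call))). In this hypothetical `OrderFlowSketch`, the retry delays, the timeout and the half-open recovery are left out, and an `IllegalStateException` stands in for the `CircuitBreakerOpenException` the real breaker throws.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Supplier;

final class OrderFlowSketch {

    private static final int MAX_RETRIES = 3;              // @Retry(maxRetries = 3)
    private static final int REQUEST_VOLUME_THRESHOLD = 4; // @CircuitBreaker(requestVolumeThreshold = 4)
    private static final double FAILURE_RATIO = 0.75;      // @CircuitBreaker(failureRatio = 0.75)

    private final Deque<Boolean> window = new ArrayDeque<>(); // recent outcomes, true = failure
    private boolean open = false;
    private int paymentCalls = 0; // attempts that actually reached PaymentService

    /** Fallback(Retry(CircuitBreaker(payment))) */
    String placeOrder(Supplier<String> payment) {
        for (int attempt = 0; attempt <= MAX_RETRIES; attempt++) { // 1 call + up to 3 retries
            try {
                return throughBreaker(payment);
            } catch (RuntimeException e) {
                // Retry on any exception, including the open-circuit rejection,
                // mirroring @Retry's default retryOn setting.
            }
        }
        return fallbackPayment();
    }

    private String throughBreaker(Supplier<String> payment) {
        if (open) {
            throw new IllegalStateException("circuit open"); // stand-in for CircuitBreakerOpenException
        }
        paymentCalls++;
        try {
            String result = payment.get();
            record(false);
            return result;
        } catch (RuntimeException e) {
            record(true);
            throw e;
        }
    }

    private void record(boolean failed) {
        window.addLast(failed);
        if (window.size() > REQUEST_VOLUME_THRESHOLD) {
            window.removeFirst();
        }
        long failures = window.stream().filter(f -> f).count();
        if (window.size() == REQUEST_VOLUME_THRESHOLD
                && (double) failures / REQUEST_VOLUME_THRESHOLD >= FAILURE_RATIO) {
            open = true;
        }
    }

    private String fallbackPayment() {
        return "Payment service is currently unavailable. Please try again later.";
    }

    int paymentCalls() {
        return paymentCalls;
    }
}
```

With a PaymentService that always fails, the first order makes 4 attempts (the initial call plus 3 retries), which fill the window with failures and open the circuit; a second order never reaches PaymentService at all. Like `@Retry` with its defaults, the sketch also retries the open-circuit rejection; MicroProfile's `@Retry` has an `abortOn` attribute (for example `abortOn = CircuitBreakerOpenException.class`) if you prefer to go straight to the fallback.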

Conclusion

The Circuit Breaker Pattern is essential for preventing cascading failures in microservices. It ensures that a failure in one service doesn’t overwhelm others and helps the system recover gracefully. Through annotations like @CircuitBreaker, @Retry, @Fallback, and @Timeout, we can make our microservices more resilient and fault-tolerant.

This practical example shows how easy it is to implement a circuit breaker with modern frameworks like Quarkus and MicroProfile Fault Tolerance. By protecting your services with a circuit breaker, you prevent unnecessary failures and improve your system’s reliability.